
The DCC Results (May 2009 - May 2010)

In the Database Competence Centre, a major focus

of work during the period May 2009 to May 2010 has

been the database release 11.2, also called 11gR2.

This new Oracle release introduces fundamental

changes which are of major interest for CERN

database usage. Studies were carried out on some of

the most innovative areas: Oracle Streams, Oracle

Automatic Storage Management, Oracle Active Data

Guard and Oracle Advanced Compression. In the past

months, new techniques have been developed for

Streams deployment, and virtualisation has been a

major area of progress, with close collaboration

with the Oracle WebLogic-VE team. Finally,

significant joint work was done on monitoring.

Oracle Database 11g Release 2

Within the CERN openlab collaboration, several

tests have been performed in the Oracle Streams

environment which is used for metadata replication

from Tier-0 to Tier-1 databases as part of the WLCG

framework. These tests were focused on the overall

performance of dataflow and new features introduced

by Oracle in the 11g release 2 version. The results

confirmed that the new concept of Oracle Streams

architecture called Combined Capture and Apply

provides replication that is ten times more efficient than

the previous architecture. After fruitful collaboration with

the Oracle Streams project team, it was possible to

replicate roughly 40 000 record changes per second,

a rate never achieved before. Snapshots, process

dumps and test results were sent to Oracle for

further improvement of the product. Besides a more

than satisfactory data throughput performance, the

new release of Oracle Streams provides features

increasing the availability of the replication.

Intelligent management of multi-destination

replication helps to avoid bottlenecks and handles

downtimes of replicas. Administration of Oracle

Streams is simplified by introducing a new set of

packages for replication management and monitoring.

For data consistency resolution, Oracle came up with

a new feature called Compare and Converge. It

enables one to perform comparisons of data objects

from primary and replica databases. When

inconsistencies are found, the administrator can fix

them by running the converging procedure. The

performance tests show that comparing

completely inconsistent tables proceeds

at two megabytes of data

per second on average. When the data are consistent,

comparison is eight times faster (i.e. 16 megabytes per

second). The convergence of data has an average

speed of four megabytes per second. Despite some

limitations, the Compare and Converge package is a

promising approach for the resynchronisation of data

using direct access to the data files of replicated

schemas. It might be useful in the case of

unrecoverable failures of media at the primary

databases. CERN is therefore looking forward

to taking advantage of Oracle Database 11g

Release 2, whose deployment to production

is foreseen for next year. In addition, all Streams

replication monitoring tools, such as Oracle

Enterprise Manager and in-house CERN monitoring,

have been updated and tested with the latest Oracle

database version in order to be ready for the

migration.
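
The Compare and Converge throughput figures quoted above can be turned into a rough planning estimate. The sketch below is illustrative only: the function name, the example table size and the assumption that throughput mixes linearly between the fully consistent and fully inconsistent cases are not taken from the tests, and 1 gigabyte is taken as 1024 megabytes.

```python
# Average throughput figures quoted in the text, in megabytes per second.
COMPARE_INCONSISTENT_MB_S = 2.0   # comparing completely inconsistent tables
COMPARE_CONSISTENT_MB_S = 16.0    # comparing consistent tables (eight times faster)
CONVERGE_MB_S = 4.0               # converging inconsistent data


def estimate_hours(table_size_gb: float, inconsistent_fraction: float) -> dict:
    """Estimate compare and converge durations for one table.

    Assumption (not from the source): the inconsistent part of the table is
    compared at the slow rate and the consistent part at the fast rate.
    """
    if not 0.0 <= inconsistent_fraction <= 1.0:
        raise ValueError("inconsistent_fraction must be between 0 and 1")
    size_mb = table_size_gb * 1024
    bad_mb = size_mb * inconsistent_fraction
    good_mb = size_mb - bad_mb
    compare_seconds = (bad_mb / COMPARE_INCONSISTENT_MB_S
                       + good_mb / COMPARE_CONSISTENT_MB_S)
    converge_seconds = bad_mb / CONVERGE_MB_S
    return {
        "compare_hours": compare_seconds / 3600,
        "converge_hours": converge_seconds / 3600,
    }


if __name__ == "__main__":
    # Hypothetical 100-gigabyte table, half of which has diverged.
    print(estimate_hours(100, 0.5))
```

Under these assumptions, a hypothetical 100-gigabyte table that has half diverged would need about eight hours to compare and about three and a half hours to converge, which gives a feel for the limitations mentioned above.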

The new features of Oracle

Automatic Storage Management and Oracle ASM Cluster

File System (ACFS) have been tested. File system

tests were conducted using either local disks (RAID

1, 500-gigabyte SATA) or SAN storage (three

storage units over 4 Gbit/s Fibre Channel, dual

channel with multipathing, sixteen 400-gigabyte SATA

disks each). A number of file systems were created:

ext3 on local disk, ext3 and ext2 on one ASM Dynamic

Volume Manager volume, and ACFS shared between two

nodes. A number of file operations were tested and

the time needed compared: large file creation and

deletion, parallel access, small file creation and

deletion as well as archive extraction. The results

of the tests were shared with Oracle. Oracle ACFS is

much faster than ext3, with comparable or lower CPU

usage for most operations.

Oracle Data Guard is a key technology to achieve

high availability. It belongs to Oracle’s Maximum

Availability Architecture (MAA) best practices. A

Data Guard configuration consists of a primary

database and one or more standby databases

running on different resources. If the primary

database goes down, the standby database can be

activated as the new primary database within a few

minutes, thus significantly decreasing the planned

and unplanned downtime. In 2009, all critical

databases of the LHC experiments deployed a Data

Guard setup and some even benefited from activating

the standby database as the primary, hence

minimising the impact on the service. Based on the

very positive experience with Oracle Data Guard, the

LHC experiments are very much looking forward to

using the Oracle Active Data Guard feature available

in 11g, which was thoroughly tested in openlab. When

using Oracle Active Data Guard, the physical standby

database can be opened in read-only mode and the

recovery process

restarted, so the standby will be in sync with the

primary and can be used for reporting. This, with

the option to take fast backups from the standby,

can reduce the load on the primary considerably. The

creation of a standby database is easier in 11g

thanks to the new Oracle Recovery Manager command

which was successfully tested. Real-Time Query

performance and long-term stability proved to be

very encouraging. The configuration, maintenance and

monitoring using the Oracle Data Guard Broker are

very promising. Thanks to its ease of deployment,

its performance, its monitoring and its other features, Oracle

Active Data Guard will replace the Oracle Data Guard

deployments of all critical LHC databases.

Worldwide replications using Oracle Streams

Oracle Streams is the main replication technology

used for LHC data distribution. From CERN, the data

are distributed (using Oracle Streams) to ten sites

around the globe (Tier-1 sites), enabling a highly

complex replication environment where the ongoing

maintenance presents a variety of challenges. One of

the main problems is the Streams resynchronisation

after a long downtime at one of the destination

sites. After five days, the archived log files,

in which the database changes are logged, are

removed from the primary database. At this point,

the defined synchronisation window is exceeded:

recovering archived log files from backups is costly

and the sustainable replication rate might be

surpassed (Streams processes might not be able to

recover the backlog generated during the

intervention). The only solution is then a

complete re-instantiation of the replica site.

However, the data transfer (schemas and tables)

using

the Data Pump utility may take days depending on the

amount of data to be transferred and the capacity of

the destination site. Within the openlab framework,

a new procedure to perform a complete Streams re-instantiation

of a destination database (out of the replication

flow) was developed using transportable tablespaces

to copy the data from another replica and minimise

the impact of the operations on the source database.

When a complete re-instantiation

of a replica site in a Hub/Spoke Streams environment

is needed, using transportable tablespaces to

resynchronise the replica database shortens

the process considerably.
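
The decision described above (resynchronise while the archived logs are still available, otherwise re-instantiate) can be sketched as follows. This is a minimal illustration, not the procedure developed in openlab: the function names, the returned labels and the handling of the exact five-day boundary are assumptions, and the rates in the usage note are made-up numbers.

```python
from datetime import datetime, timedelta
from typing import Optional

# Retention of archived log files on the primary database, as stated in the text.
ARCHIVED_LOG_RETENTION = timedelta(days=5)


def recovery_strategy(outage_start: datetime, now: datetime,
                      retention: timedelta = ARCHIVED_LOG_RETENTION) -> str:
    """Pick a recovery path for a replica that has been down since outage_start.

    Assumption: an outage of exactly the retention period is treated as
    already outside the synchronisation window.
    """
    if now < outage_start:
        raise ValueError("now must not be earlier than outage_start")
    if now - outage_start < retention:
        return "resynchronise"      # archived logs are still on the primary
    return "re-instantiate"         # window exceeded, rebuild the replica


def catch_up_seconds(backlog_changes: float, apply_rate: float,
                     incoming_rate: float) -> Optional[float]:
    """Time to drain a backlog while new changes keep arriving.

    Returns None when the replica applies changes no faster than they
    arrive, i.e. the sustainable replication rate is surpassed.
    """
    if apply_rate <= incoming_rate:
        return None
    return backlog_changes / (apply_rate - incoming_rate)
```

For example, with a hypothetical backlog of 3 600 000 changes, an apply rate of 40 000 changes per second and 30 000 new changes arriving per second, `catch_up_seconds` returns 360 seconds; if the two rates were equal it would return `None`, the case in which the backlog can never be recovered.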

Virtualisation and monitoring

Within the context of

openlab, significant work on virtualisation has been

completed and two versions of Oracle VM (2.1.5 and

2.2) have been packaged and made available for

automatic installation and central management. The

development of these two versions, following input

from CERN and following initial openlab evaluations,

has been crucial as it enables the use of Oracle VM

in large-scale environments; the package can be

installed and configured quickly and without human

interaction.

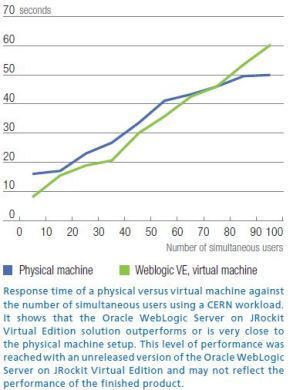

The

team has also participated in a series of tests

looking at the Oracle WebLogic Server on JRockit

Virtual Edition, which is a special version of

Oracle WebLogic Server. The deployment benefits for

the organisation are impressive as this solution

significantly simplifies the maintenance of the

middleware solutions and provides cost-effective

scalability on demand as it runs without a guest

Operating System. Two Administrative Information Services

(AIS) applications, chosen as models because of their

complexity and the intensity of their workload, were

deployed successfully. This

gives the team confidence that any AIS application

can be deployed. Stress tests were also performed

comparing the physical machine and the virtual

machine, with an even better performance obtained in

the virtual machine in some situations. This level

of performance was reached with an unreleased

version of the Oracle WebLogic Server on JRockit

Virtual Edition and may not reflect the performance

of the finished product.

Thanks to the close collaboration

between CERN openlab and Oracle’s Enterprise Manager

team, it was possible to do extensive beta-testing

of the newest release, 10.2.0.5, which led to a

seamless upgrade of the production monitoring

system. The team continued to centralise and

standardise monitoring of the infrastructure by

relying on the new features of Oracle Enterprise

Manager instead of legacy monitoring solutions.

Focusing on the following features was of great

benefit to CERN: User Defined Policies and Metrics

were investigated and used for implementing new

monitoring rules, which are specially adapted to

CERN requirements and complement the out-of-the-box

policies already used. Oracle Enterprise Manager

Beacons is a new feature used to monitor

service-based availability. Databases, hosts,

listeners and application servers are grouped

together to form Systems. Services are represented

as a set of user-defined tests configured to run

against the System’s components. These tests run from

different locations thus evaluating service

availability from the user perspective. The

Beacon-based service monitoring is now in

pre-production for some major CERN applications like

Engineering Data Management System, Administrative

Information Services, Software Version Control, etc.

All of these results have been published and were

presented at Oracle OpenWorld in San Francisco in

October 2009 and the United Kingdom Oracle User

Group conference in Birmingham in December 2009.
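
The Beacon-based model described above (user-defined tests run from several locations against a System's components) can be illustrated with a small data structure. This is a conceptual sketch only and does not use or reproduce the Oracle Enterprise Manager API: the class, the function names, the test names and the rule that a service counts as available when at least one location passes all of its tests are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class ProbeResult:
    """Outcome of one user-defined test run from one beacon (hypothetical model)."""
    service: str   # e.g. an application offered to users
    test: str      # name of the user-defined test
    beacon: str    # location the test was run from
    passed: bool


def status_per_beacon(results: Iterable[ProbeResult], service: str) -> Dict[str, bool]:
    """For each beacon, report whether every test of the service passed there."""
    status: Dict[str, bool] = {}
    for result in results:
        if result.service != service:
            continue
        status[result.beacon] = status.get(result.beacon, True) and result.passed
    return status


def is_available(results: Iterable[ProbeResult], service: str) -> bool:
    """Assumed rule: the service is up if at least one beacon sees all tests pass."""
    return any(status_per_beacon(results, service).values())
```

Keeping the per-location view separate from the overall verdict reflects the point made in the text: availability is judged from where the users are, so a failure seen from only one location remains visible even when the service as a whole is reported as up.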


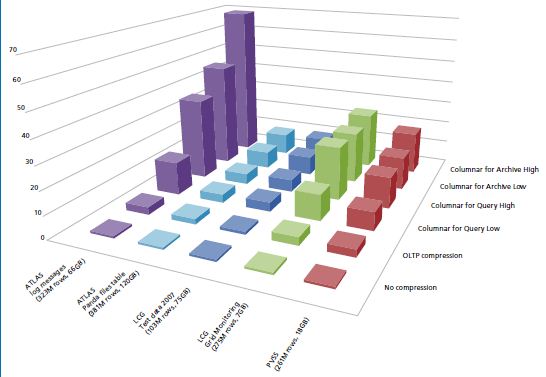

Test of the Advanced Compression Option with Oracle Exadata

The

expected growth of the LHC

experiments' databases is roughly

20 terabytes per year per

experiment. They need to have

all data available at all times,

not only during the experiment

lifetime (10−15 years), but also

for some time afterwards, as the

data analysis will continue. To

meet this need it is necessary

to provide an efficient way of

storing and accessing petabytes of data,

most of which are

read-only. The answer to this

challenge could be the

compression available in Oracle

Database 11g Release 2 on the

Exadata Database Machine. Tests were

performed to validate the

hypothesis. The system used was

located in Reading, UK, and

accessed remotely from Geneva.

It consisted of four nodes and

seven storage cells with 12

disks each. The tests focused

mainly on OLTP compression and Exadata Hybrid

Columnar Compression (EHCC) of

large tables for various

representative production and

test applications used by the

physics community, like PVSS,

GRID monitoring and test data,

file transfer (PANDA) and

logging application for the

ATLAS experiment. Tests on

Data Pump export compression were

also performed. The results

are impressive: compression

factors of 2−6X were achieved

with OLTP compression and 10−70X

with EHCC in archive high

mode. EHCC can

achieve up to 3X better

compression than tar bzip2

compression of the same data

exported uncompressed. Oracle

Compression offers a win-win

solution, especially for OLTP

compression as it shrinks the

used storage volume while

improving performance.

Compression factor for

physics database applications

using 11gR2 compression. The X

axis represents the selected

applications, the Y axis

represents the different

compression types and the Z axis

represents the compression

factor.
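
To put the growth and compression figures above in perspective, the following sketch projects the storage volume of one experiment. It is a back-of-the-envelope calculation under stated assumptions: constant growth of 20 terabytes per year, a single compression factor applied uniformly to all data, and no allowance for indexes, backups or replicas. The function name is hypothetical.

```python
# Expected growth per experiment, as quoted in the text.
GROWTH_TB_PER_YEAR = 20.0


def projected_volume_tb(years: float, compression_factor: float = 1.0) -> float:
    """Projected volume in terabytes after `years` of constant growth.

    Assumption: the whole data set compresses by the same factor.
    """
    if years < 0:
        raise ValueError("years must not be negative")
    if compression_factor <= 0:
        raise ValueError("compression_factor must be positive")
    return GROWTH_TB_PER_YEAR * years / compression_factor


if __name__ == "__main__":
    # Factors taken from the ranges reported in the tests (2-6X OLTP, 10-70X EHCC).
    for label, factor in [("uncompressed", 1), ("OLTP 2X", 2), ("OLTP 6X", 6),
                          ("EHCC 10X", 10), ("EHCC 70X", 70)]:
        print(f"{label:>12}: {projected_volume_tb(15, factor):7.1f} TB after 15 years")
```

Under these assumptions, 15 years of data amount to 300 terabytes uncompressed and to 30 terabytes at the lower end of the EHCC range; real savings would depend on how much of the data is eligible for each compression type.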
